Guided Depth Super-Resolution by Deep Anisotropic Diffusion

Code – Proceedings – arXiv

Abstract

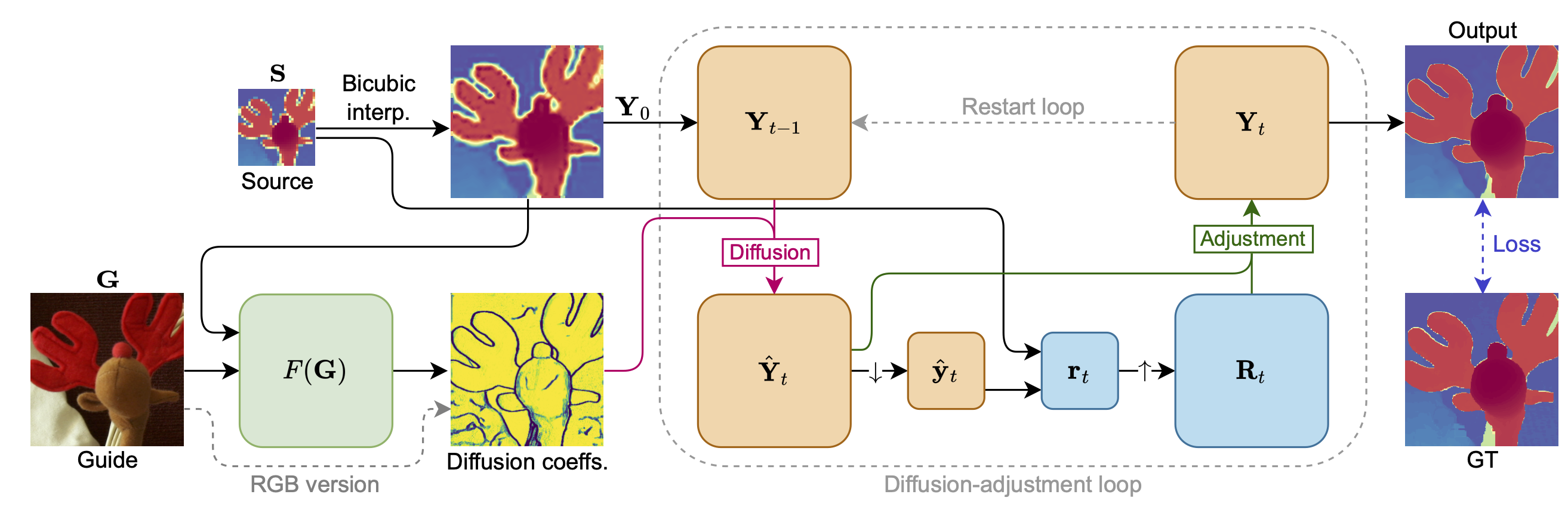

Performing super-resolution of a depth image using the guidance from an RGB image is a problem that concerns several fields, such as robotics, medical imaging, and remote sensing. While deep learning methods have achieved good results on this problem, recent work has highlighted the value of combining modern methods with more formal frameworks. In this work we propose a novel approach that combines guided anisotropic diffusion with a deep convolutional network and advances the state of the art for guided depth super-resolution. The edge transferring/enhancing properties of the diffusion are boosted by the contextual reasoning capabilities of modern networks, and a strict adjustment step guarantees perfect adherence to the source image. We achieve unprecedented results on three commonly used benchmarks for guided depth super-resolution. The performance gain compared to other methods is largest at larger scales, such as ×32 scaling. Code for the proposed method will be made available to promote reproducibility of our results.
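To make the two ingredients named in the abstract more concrete, the following is a minimal NumPy sketch of guided anisotropic diffusion alternated with an adjustment step. It is an illustration under stated assumptions, not the authors' implementation: the diffusion coefficients here are a hand-crafted Perona-Malik function of a grayscale guide, standing in for whatever the deep network contributes in the proposed method; the source is assumed to relate to the high-resolution depth by block averaging; and the adjustment is assumed to be a per-block multiplicative rescaling. The function names and the parameters `k`, `lam`, and `n_iter` are hypothetical.

```python
import numpy as np

def diffusion_coefficients(guide, k=0.03):
    """Edge-stopping weights from a grayscale guide of shape (H, W).

    Assumption: a hand-crafted Perona-Malik function, not learned features.
    """
    dv = guide[1:, :] - guide[:-1, :]   # differences between vertical neighbors
    dh = guide[:, 1:] - guide[:, :-1]   # differences between horizontal neighbors
    cv = 1.0 / (1.0 + (dv / k) ** 2)    # close to 0 across strong guide edges
    ch = 1.0 / (1.0 + (dh / k) ** 2)
    return cv, ch

def diffuse_step(depth, cv, ch, lam=0.24):
    """One explicit diffusion iteration; lam <= 0.25 keeps the update stable."""
    fv = cv * (depth[1:, :] - depth[:-1, :])   # flux between vertical neighbors
    fh = ch * (depth[:, 1:] - depth[:, :-1])   # flux between horizontal neighbors
    out = depth.copy()
    out[:-1, :] += lam * fv
    out[1:, :] -= lam * fv
    out[:, :-1] += lam * fh
    out[:, 1:] -= lam * fh
    return out

def adjust(depth, source, scale):
    """Rescale each scale x scale block so its mean equals the source pixel.

    Assumption: multiplicative correction with average-pooling downsampling.
    """
    h, w = source.shape
    blocks = depth.reshape(h, scale, w, scale)
    means = blocks.mean(axis=(1, 3))
    ratio = source / np.maximum(means, 1e-8)
    return (blocks * ratio[:, None, :, None]).reshape(h * scale, w * scale)

def guided_super_resolution(source, guide, scale, n_iter=200):
    """Upsample `source` by `scale`, diffusing along the structure of `guide`."""
    depth = np.kron(source, np.ones((scale, scale)))  # nearest-neighbor init
    cv, ch = diffusion_coefficients(guide)
    for _ in range(n_iter):
        depth = diffuse_step(depth, cv, ch)
        depth = adjust(depth, source, scale)
    return depth
```

Because the adjustment runs last in every iteration, average-pooling the output reproduces the low-resolution source for positive depth values, which is the sense in which such a step enforces adherence to the source image.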

CVPR 2023 Video

Additional Results

| Image | ×8 | ×32 |
|---|---|---|
| | Video link | Video link |
| | Video link | Video link |
| | Video link | Video link |
| | Video link | Video link |
| | Video link | Video link |

BibTeX citation

@InProceedings{Metzger_2023_CVPR,
    author    = {Metzger, Nando and Daudt, Rodrigo Caye and Schindler, Konrad},
    title     = {Guided Depth Super-Resolution by Deep Anisotropic Diffusion},
    booktitle = {Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR)},
    month     = {June},
    year      = {2023},
    pages     = {18237-18246}
}